Why I believe in AGI (again)

I keep getting asked why I believe in AGI again.

First, I’m now convinced that ChatGPT understands what it reads. Second, reasoning models persuade me that ChatGPT is creative. Third, ChatGPT summarizes texts extremely well, which I believe to be a robust measure of intelligence.

At the same time, I don’t believe in “general intelligence” (I don’t consider myself one) and so I don’t believe the idea of “AGI” is very meaningful.

Finally, AI products now pay for AGI research, which means that AI has reached the early stages of the long-awaited self-improvement loop. I believe that this makes a lot of the discussion of “AGI” and “superintelligence” timelines obsolete.

I expand on all of these points below.

ChatGPT understands what it reads

For me, the question of whether we’re making progress towards AGI has always been about understanding. Does ChatGPT really understand or is it just a Chinese room stupidly mapping inputs to outputs? I now think it does understand.

What really convinced me was this one tweet. In it, François Fleuret makes fun of the OpenAI o1 model for failing to notice that the logical puzzle he asked it to solve had a trivial solution.

I remember first seeing this tweet in September 2024 and laughing at it too, thinking what a perfect demonstration of how dumb LLMs actually are.

In April 2025, I stumbled on that tweet again and thought, why not see if OpenAI o3 would make the same mistake? And it did. o3, a model that is described by one of the greatest living mathematicians as being on par with a not-completely-incompetent mathematics graduate student, cannot solve a literally trivial puzzle.

But then I started thinking. What if the model did understand the puzzle and just didn’t pay enough attention to it? So I asked it to read the question more carefully. And guess what. It solved it. As soon as o3 read carefully, it had no problem solving a novel puzzle it’s never encountered before.

Another example. These questions by Quintus. They’re his joking attempt to construct a series of Law School Admission Test-style questions based on my “I’m broke, divorced, and unemployed” piece. These are very difficult questions about the meaning of a text ChatGPT 100% never saw before. It answers most of them right. I simply don’t see how that would be possible without it possessing some kind of understanding of the text.

Another example. I was writing a short piece recently. I asked a very smart friend of mine what they thought the main point of the piece was. They got it wrong. I asked ChatGPT what the main point of the piece was. ChatGPT got it right. Again, the question provably was not answered anywhere ever & was fully self-contained. The only way to learn the main point of it would be to read it and, I believe, to understand it. My very smart friend failed. ChatGPT succeeded. If this doesn’t convince you that ChatGPT can generalize outside its training distribution, I don’t know what would.

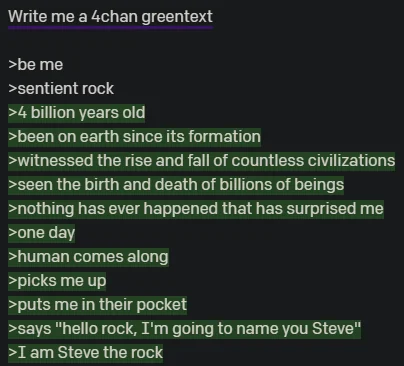

Or take this greentext from GPT-3:

Can you read this greentext and say that ChatGPT is a stochastic parrot with no sense of what it says? I can’t.

For me, these examples make an incredibly convincing case that ChatGPT is an actual intelligent entity and that therefore we are well on the path to building AGI.

Interlude: women are good for you

A reasonable question to ask here would be: How come I needed to be convinced that ChatGPT understands today, 3 years later?

Large language models exhibited all of these signs of understanding years ago! The greentext I just presented, for example, is from 2022. I spent a ton of time with LLMs back then and used GPT-3 to help me write multiple blog posts in 2022 and 2023.

I think what happened is that I got psychologically overwhelmed by my previous apocalyptic-style belief in AGI and getting fired from OpenAI in late 2023. I guess it was basically a nervous breakdown.

The combination of thinking that AI is going to radically change (or destroy) the world in 3-5 years and not being able to do anything about it (on account of getting fired) paralyzed me, and at some point my brain just blocked any thoughts about AI.

As a result, for most of 2024, I basically stopped using ChatGPT and the only news about AI I would pay attention to was how it’s not happening.

Around October 2024, I started to get seriously worried that the reasons I just noted were why I wasn’t thinking about AI. I figured I should get a job at OpenAI again to make sure I don’t have any residual trauma associated with it & I did it in January 2025.

I also started dating a beautiful girl who is completely outside of the AI bubble but who uses ChatGPT for about 12 hours a day, and I couldn’t help but get curious about this. Looking at her ChatGPT usage, I just couldn’t keep maintaining the belief that AI is not happening.

The combo of OpenAI + my girlfriend got me emotionally unstuck and I started to be able to notice all of these pieces of evidence that I wasn’t considering before.

It is also remarkable to me just how differently I interpret the same evidence today compared to 6-12 months ago.

The logical puzzle I mention in the beginning of this post is one example. I initially collected it as an example of how stupid ChatGPT was. Then I looked at it 8 months later and it became an example of how smart ChatGPT is.

Or take the famous “9.11 or 9.9 what’s bigger” question.

I used to laugh at ChatGPT every time I saw it. But I thought about it more recently and concluded it’s a problem of context, not intelligence. There truly are many instances where 9.11 is bigger than 9.9 (books, academic papers, software versions). It’s not unreasonable for ChatGPT to think that the question “9.11 or 9.9 what’s bigger” is about a context in which 9.11 is bigger than 9.9, if no other information is given to it.
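To make the context point concrete, here is a minimal Python sketch of the same two strings compared under two readings. The helper names `as_decimal` and `as_version` are made up for this illustration, and the "version" reading is just one assumed convention (split on dots, compare the parts as integers), not anything ChatGPT is known to do internally.

```python
from decimal import Decimal
from typing import Tuple


def as_decimal(label: str) -> Decimal:
    """Read the label as an ordinary decimal number."""
    return Decimal(label)


def as_version(label: str) -> Tuple[int, ...]:
    """Read the label as dot-separated parts (chapter.section, major.minor).

    Assumed convention for this sketch: each part is an integer and
    tuples are compared left to right.
    """
    return tuple(int(part) for part in label.split("."))


a, b = "9.11", "9.9"

# As decimals, 9.11 is smaller than 9.90.
print("decimal reading, 9.11 > 9.9:", as_decimal(a) > as_decimal(b))

# As versions or section numbers, part 11 comes after part 9.
print("version reading, 9.11 > 9.9:", as_version(a) > as_version(b))
```

Same characters, opposite answers: which one is "right" depends entirely on which reading the question intends.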

Relatedly, in 2024 I collected a bunch of examples of ChatGPT failing on seemingly simple tasks. Most of them are now solved, usually one-shotted by ChatGPT. Example 1. Example 2. Example 3.

It’s become very difficult for me to maintain the belief in the stupidity of ChatGPT when every time I laugh at it, it ends up ridiculing me 6 months later.

Large language models are creative

or: LLMs + RL = intelligence move 37

Okay, so what if large language models like ChatGPT understand? Maybe they still can’t come up with new stuff and do science. Dwarkesh famously asked “what should we make of the fact that despite having basically every known fact about the world memorized, these models haven’t, as far as I know, made a single new discovery?”

RL working on LLMs – pioneered by OpenAI’s reasoning models – convinced me that this is not a problem because whenever RL works it discovers new and creative solutions. Chess (do watch the video). Go. Math. Physics simulations. Video Games. Anything.

RL working = creativity. This is The Paradigm.

For me, it all came together when I read Andrej Karpathy’s Twitter essay on Move 37:

“Move 37” is the word-of-day - it’s when an AI, trained via the trial-and-error process of reinforcement learning, discovers actions that are new, surprising, and secretly brilliant even to expert humans. It is a magical, just slightly unnerving, emergent phenomenon only achievable by large-scale reinforcement learning. You can’t get there by expert imitation. It’s when AlphaGo played move 37 in Game 2 against Lee Sedol, a weird move that was estimated to only have 1 in 10,000 chance to be played by a human, but one that was creative and brilliant in retrospect, leading to a win in that game.

We’ve seen Move 37 in a closed, game-like environment like Go, but with the latest crop of “thinking” LLM models (e.g. OpenAI-o1, DeepSeek-R1, Gemini 2.0 Flash Thinking), we are seeing the first very early glimmers of things like it in open world domains. The models discover, in the process of trying to solve many diverse math/code/etc. problems, strategies that resemble the internal monologue of humans, which are very hard (/impossible) to directly program into the models. I call these “cognitive strategies” - things like approaching a problem from different angles, trying out different ideas, finding analogies, backtracking, re-examining, etc.

Weird as it sounds, it’s plausible that LLMs can discover better ways of thinking, of solving problems, of connecting ideas across disciplines, and do so in a way we will find surprising, puzzling, but creative and brilliant in retrospect.

As X. Dong from NVIDIA notes about a recent result, “The RL-ed model makes great progress on some problems that the base model has no understanding of, regardless of how many times it tries.”

All of this creativity discussion also reminds me of Tyler Cowen’s point that he got AI-pilled decades ago when he saw AI beating humans at chess. People thought that chess was incredibly intuitive and so once Tyler saw AI beating us at chess he figured it’ll beat us at other intuitive things as well (1, 2, 3).

Compression is intelligence and ChatGPT compresses really well

When I want to figure out how smart someone is, I often ask them: “what’s the main point of X (e.g. an essay)?”. What the question really asks is to compress many words (an essay) into few words (a sentence), while discarding everything unimportant. If the person I’m talking to can do it, I conclude they’re smart. If they can’t, the reason I’m still talking to them is probably because they’re very good-looking. (This is also why I much prefer short books – I suspect they’re written by smarter people.)

So when I ask ChatGPT what the main point of an essay is (as I did in one of the examples here earlier) and it succeeds when my very smart friend fails, I conclude that it is really good at compression and is really smart.

(There’s a very closely related point about prediction ability being an indicator of intelligence. I think compression and prediction are two sides of the same coin and so it makes sense that ChatGPT learned how to compress the text very well by learning to predict the next word it’s going to see during its training.)
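The link between prediction and compression can be sketched in a few lines of Python. This is a toy under stated assumptions, not a description of how ChatGPT is trained: it uses the standard information-theory fact that a symbol predicted with probability p can ideally be coded in about -log2(p) bits, and a deliberately crude predictor (running character counts with add-one smoothing). The function names `uniform_bits` and `adaptive_bits` are hypothetical.

```python
import math
from collections import Counter


def uniform_bits(text: str, alphabet_size: int) -> float:
    """Bits needed if every character is treated as equally likely."""
    return len(text) * math.log2(alphabet_size)


def adaptive_bits(text: str, alphabet: str) -> float:
    """Ideal code length when each character costs -log2(p) bits.

    p comes from a toy predictor: counts of the characters seen so far,
    with add-one smoothing so unseen characters keep a nonzero probability.
    Assumes every character of `text` is in `alphabet`.
    """
    counts = Counter()
    total_bits = 0.0
    for position, ch in enumerate(text):
        p = (counts[ch] + 1) / (position + len(alphabet))
        total_bits += -math.log2(p)
        counts[ch] += 1  # the predictor learns only after "paying" for ch
    return total_bits


alphabet = "abcdefghijklmnopqrstuvwxyz "
text = "ab" * 50  # a very predictable 100-character string

print("no prediction:  ", round(uniform_bits(text, len(alphabet)), 1), "bits")
print("with prediction:", round(adaptive_bits(text, alphabet), 1), "bits")
```

The better the predictor guesses the next character, the fewer bits the text costs, which is the sense in which learning to predict and learning to compress are the same skill.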

Why I think the idea of AGI is stupid

Because I don’t believe in “general intelligence”. AGI is usually defined in some reference to humans and, for one, I think I am an extremely narrow intelligence.

For example, I can’t multiply 3289 by 5721 by myself. In fact, I can’t even multiply 328 by 572! I can just barely multiply 32 by 57 and I’ll still fail like 20% of the time.

I’ve set my alarm to 6am hundreds of times and I still haven’t managed to figure out that I’m just going to turn it off and keep sleeping until 8am.

I can figure out what my girlfriend is upset about maybe 10% of the time, but most of the time I’m as good at figuring that out as an abacus.

Am I generally intelligent? To me, the answer is obviously no. I can learn how to do a few things here and there. I can learn how to do a few more things with a piece of paper. I can learn how to do a bunch more things by imitating other people or using my computer. But that’s it!

Human civilization and our technological progress were not enabled by any kind of general intelligence! They were enabled by us being able to learn how to do a few things here and there.

It doesn’t matter if AGI is real or not

(Funnily enough, I got this point from a recent Sam Altman blog post.)

For many decades – and as recently as a few years ago – dreams and visions were required to secure funding for AGI research. This is why the AI industry used to have periodic winters; this is why no AGI company survived for more than a few years; this is why AGI used to be a dirty word associated with, as DeepMind’s co-founder says, “the lunatic fringe”.

Today, for the first time ever, economic value created as a result of AGI research is enough to support further AGI research. Hundreds of millions of people use ChatGPT every day and tens of millions of people pay for ChatGPT.

I think it’s instructive just how different this self-improvement loop is from the one usually feared. The reason AI products pay for AGI research is that they’re useful to us. Without humans, AI is incapable of producing economic value, because the very notion of economic value is about what humans find valuable. So the ability of AI to improve itself is completely dependent on it continuing to be useful to us.

AI progress is much smoother than anyone would’ve expected and its usefulness depends much more on non-intelligent scaffolding than anyone would’ve expected. So I think at this point it really doesn’t matter when we build “AGI” or “superintelligence” or whatever. AI research pays for itself = AI is here to stay & keep improving.

I always think about technologies of the past that really changed the world. For example, the printing press completely reorganized the world in the 16th-19th centuries, resulting in the Christian Reformation, the Thirty Years’ War, modern nation states, and the eventual scientific and industrial revolutions. There was 0 intelligence contained in that technology. But it changed the way information travelled, radically increased the power of ideas and the value of literacy, and gave us awesome new powers to wield in pursuit of our goals.

AI is already making both individuals and groups of humans dramatically more leveraged in our ability to affect the world. How we’re going to use that leverage is up to us.

Acknowledgements

Thanks to Sundari Sheldon, Quentin Wach, Victor Li, Emil Ryd, and Xavi Costafreda-Fu for reading drafts and for suggestions.