The Effects on Cognition of Sleeping 4 Hours per Night for 12-14 Days: a Pre-Registered Self-Experiment

Discuss this study on my forum or see discussion on Hacker News (>400 points, >350 comments).

Abstract

I slept 4 hours a night for 14 days and didn’t find any effects on cognition (assessed via Psychomotor Vigilance Task, a custom first-person shooter scenario, and SAT). I’m a 22-year-old male and normally I sleep 7-8 hours.

I was fully alert (very roughly) 85% of the time I was awake, moderately sleepy 10% of the time I was awake, and was outright falling asleep 5% of the time. I was able to go from “falling asleep” to “fully alert” at all times by playing video games for 15-20 minutes. I ended up playing video games for approximately 30-90 minutes a day and was able to be fully productive for more than 16 hours a day for the duration of the experiment.

I did not measure my sleepiness. However, for the entire duration of the experiment I had to resist regular urges to sleep. On several occasions when I did not want to play video games, I came very close to failing the experiment: at one point I fell asleep in my chair and was woken a few minutes later by my wife. This sleep schedule was extremely difficult to maintain.

The lack of an effect on cognitive ability is surprising. It may reflect a true absence of cognitive impairment; bias in the measurements caused by my desire to demonstrate that chronic sleep deprivation does not impair cognition, combined with the lack of blinding; a lack of statistical power; and/or other factors.

I believe that this experiment provides strong evidence that I experienced no major cognitive impairment as a result of sleeping 4 hours per day for 12-14 days and that it provides weak suggestive evidence that there was no cognitive impairment at all.

I plan to follow this experiment up with an acute sleep deprivation experiment (75 hours without sleep) and longer partial sleep deprivation experiments (4 hours of sleep per day for potentially 30 or more days).

I’m wary of generalizing my results to other people and welcome independent replications of this experiment.

All scripts I used to perform statistics and to draw graphs, all raw data, and all configuration files for Aimgod (costs $5.99) and for Inquisit 6 Lab (has a 30-day trial) necessary to replicate the experiment are available on GitHub. The pre-analysis plan is available here and on GitHub.

Introduction

The cumulative cost of additional wakefulness: dose-response effects on neurobehavioral functions and sleep physiology from chronic sleep restriction and total sleep deprivation (Van Dongen H, Maislin G, Mullington JM, Dinges DF. Sleep. 2003 Mar 1;26(2):117-26) is a study published in the journal Sleep in 2003 by Hans P.A. Van Dongen, Greg Maislin, Janet M. Mullington, and David F. Dinges.

Since then it has become one of the most well-known experiments in sleep science, accumulating more than 2700 citations, and its findings have entered popular culture. In particular, it appears that two ideas originate from this study: that people do not notice the cognitive impairment caused by sleep deprivation, and that sleeping 6 hours a night for two weeks is enough to produce significant cognitive impairment.

Intrigued by the study’s findings and having my interest in sleep science heightened by my investigation into Matthew Walker’s Why We Sleep, I decided to attempt to partially replicate and extend it.

There’s not much more to say about the prior literature on the subject, as most of it is of extremely low quality. In particular, I have been able to find exactly one pre-registered experiment on the impact of prolonged sleep deprivation on cognition. It was published in 2020 by economists from Harvard and MIT and found null effects when the data are analyzed according to the authors’ pre-analysis plan.

Methods

Intervention

I gave myself a sleep opportunity of 8 hours (23:30-07:30) for 7 days prior to the experiment to make sure I didn’t have any sleep debt carried over. My average sleep duration in these days (2020-03-27 to 2020-04-02) was 7.78 hours (standard error: 0.15 hours).

I slept for 4 hours a night (3:30-7:30) for 14 days (one of the experimental conditions in Van Dongen et al. 2003), with all other sleep being prohibited, and compared my cognitive abilities on days 12, 13, and 14 of sleep deprivation (treatment) to my normal state (control). I did not test myself every day because the cognitive deficits in the 4-hour condition were increasing monotonically in Van Dongen et al., and I was only interested in the most severe consequences of sleep deprivation.

I remained inside for the duration of the experiment. I worked, played video games, watched movies, browsed the internet, read, walked around the apartment, but did not engage in any vigorous activities. I avoided direct sunlight and turned off all lights during sleep times.

I did not use any caffeine, alcohol, tobacco, and/or medications in the 2 weeks before the experiment or during the experiment.

I only ate from 12pm to 8pm for the 2 weeks before the experiment and continued this schedule during the experiment. My diet was not otherwise restricted.

I do not have any medical, psychiatric or sleep-related disorders, aside from occasionally experiencing stress-related strain in my chest, and I did not experience any unusual symptoms during the experiment.

I worked neither regular night nor rotating shift work within the past 2 years. I have not travelled across time zones in the 3 months before the experiment.

Cognitive tests

I performed 3 tests: Psychomotor Vigilance Task, a custom Aimgod scenario (guzey_arena_0), and SAT.

Figure 4. Clips of tasks I performed in the experiment. Left: PVT. Center: guzey_arena_0. Right: SAT. Created with ezgif.com.

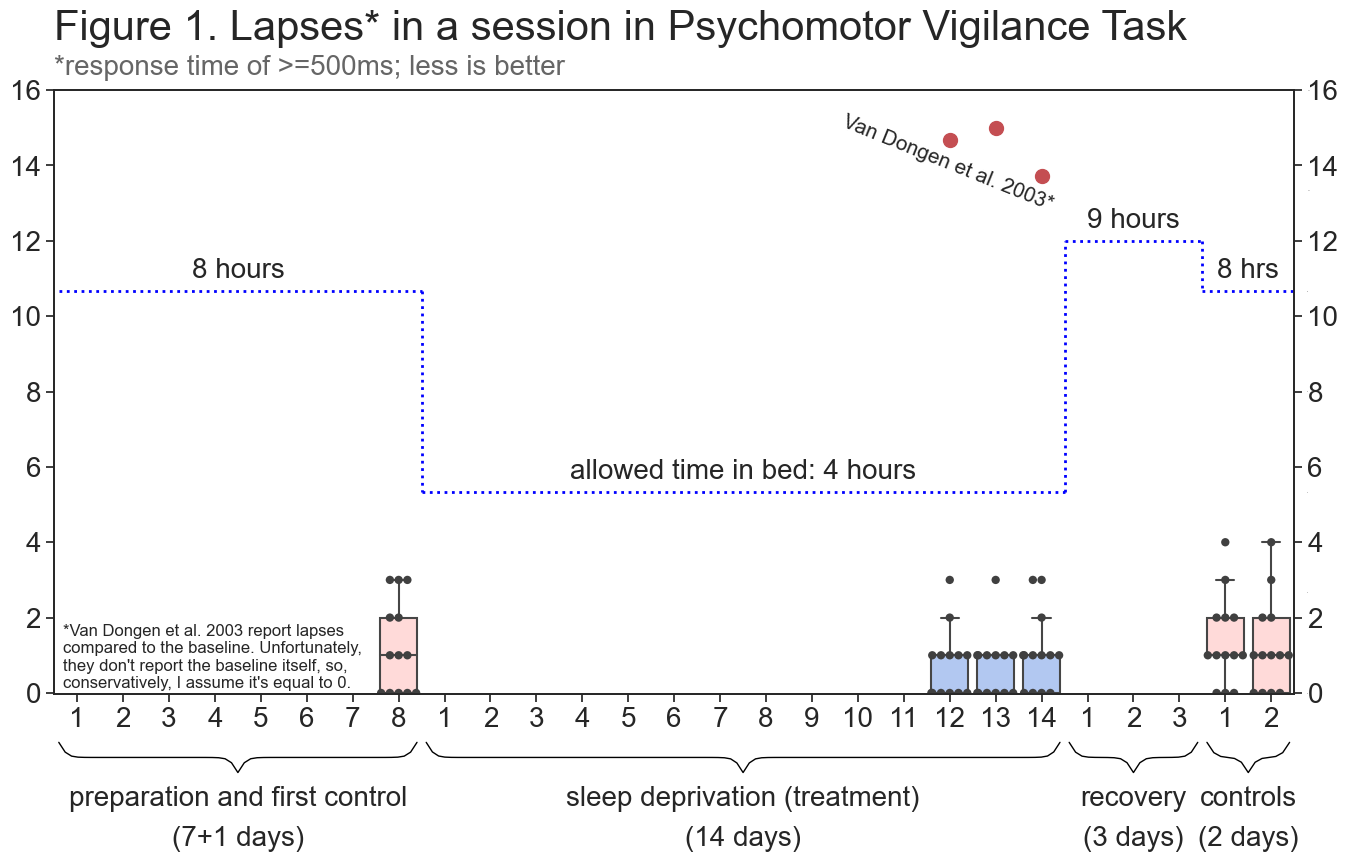

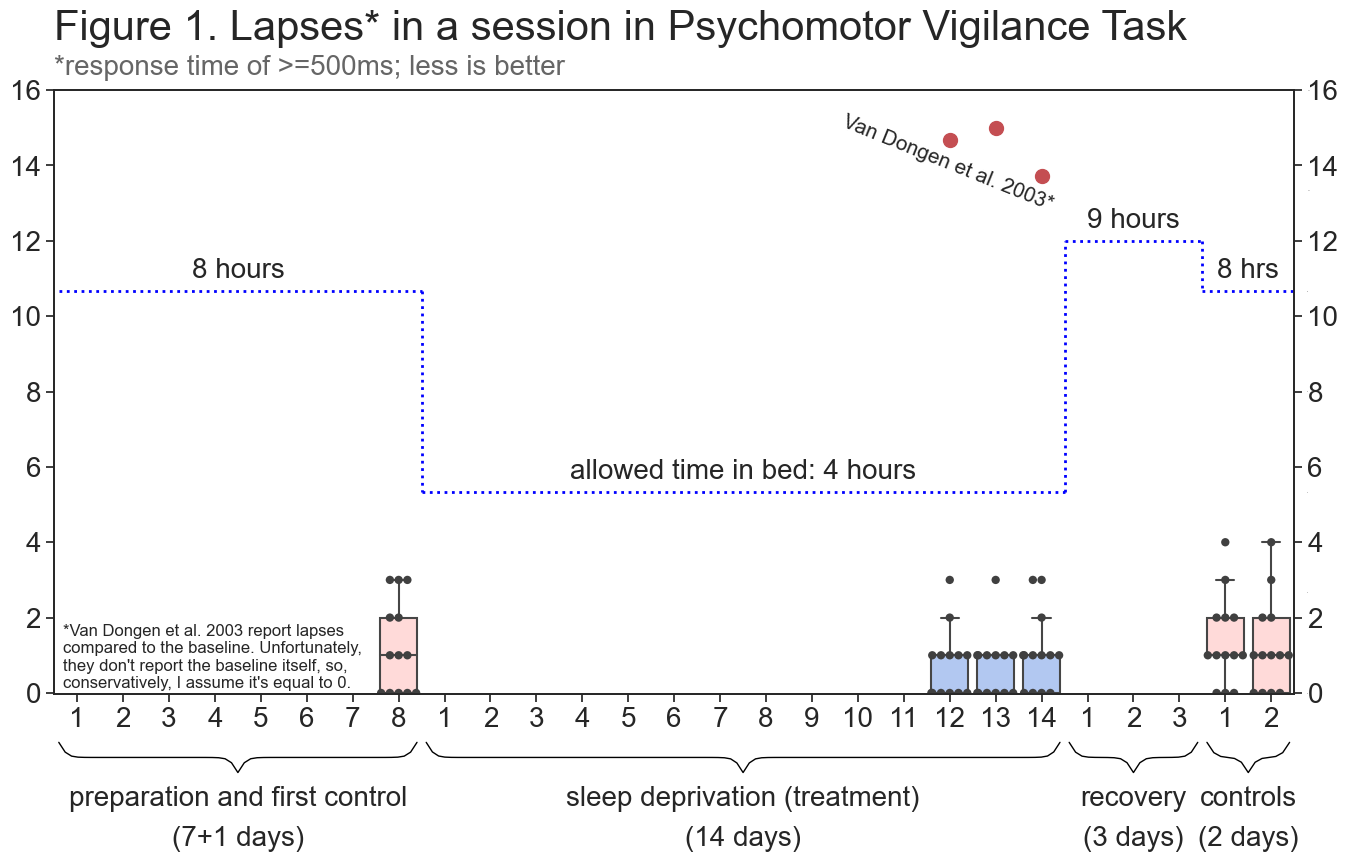

The Psychomotor Vigilance Task (PVT) is essentially a 10-minute reaction-time test that has become a standard measure of wakefulness in sleep research. The standard way to analyze PVT data is to count the number of lapses (trials in which the response time was >=500ms) in a session. This is how I analyzed the data.
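As a minimal sketch of the lapse count described above, assuming the reaction times of one session are available as a list of milliseconds (the export format and the helper name `count_lapses` are hypothetical, not part of the Inquisit setup), the analysis reduces to a threshold count. Note that this section defines a lapse as >=500ms while the pre-analysis plan below says >500ms; the two differ only for a trial of exactly 500 ms, so the comparison is kept explicit in the code.

```python
LAPSE_THRESHOLD_MS = 500  # ">=500ms" definition used in this section


def count_lapses(reaction_times_ms, threshold_ms=LAPSE_THRESHOLD_MS):
    """Count trials whose reaction time is at or above the lapse threshold."""
    return sum(1 for rt in reaction_times_ms if rt >= threshold_ms)


# Made-up reaction times for one session (not data from this experiment).
session = [243, 310, 512, 287, 500, 266, 1450]
print(count_lapses(session))  # 3
```

Switching to the pre-analysis plan's strict definition would mean changing `>=` to `>`, which would drop the 500 ms trial from the count.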

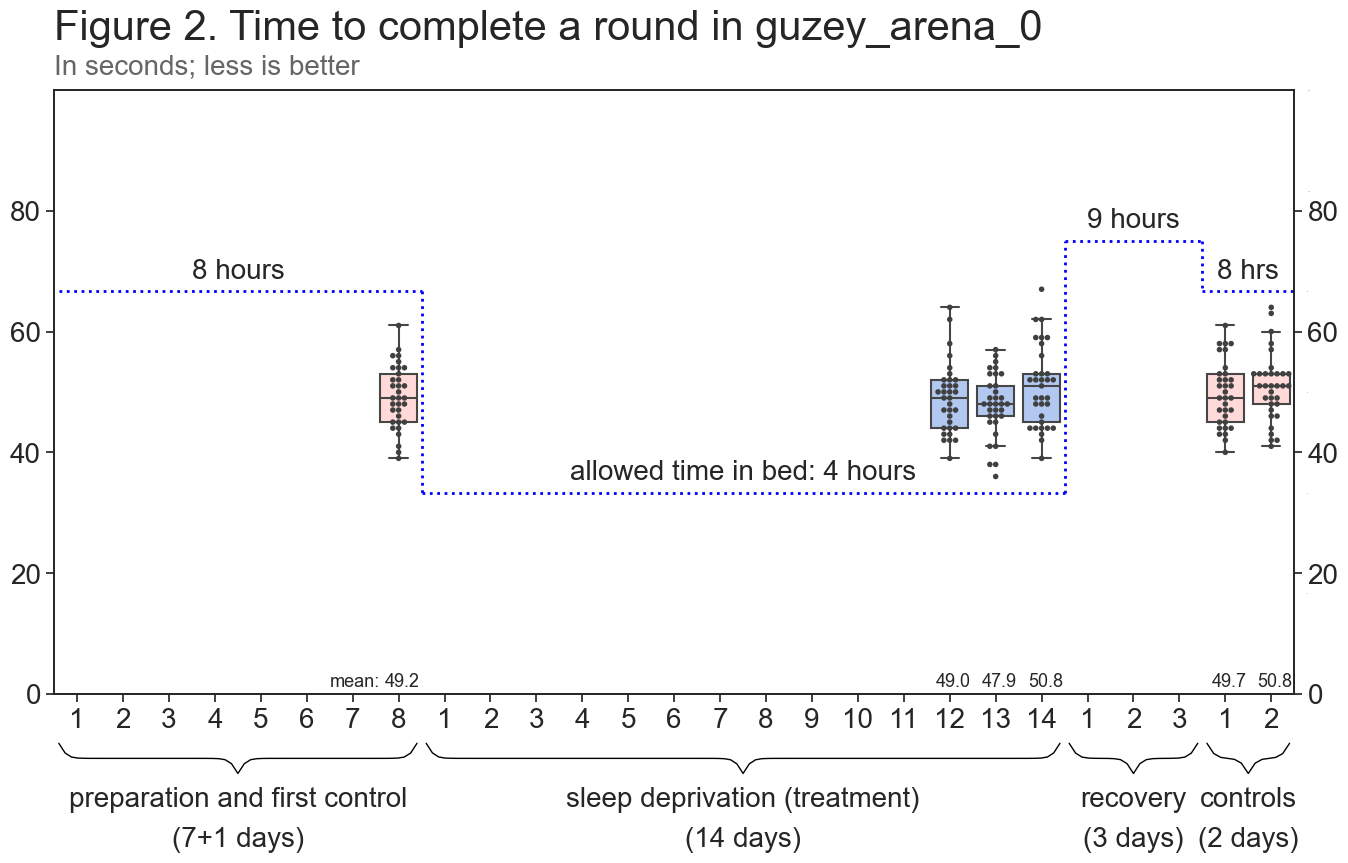

guzey_arena_0 is a first-person shooter scenario in which the player has to kill 20 bots running around the arena as quickly as possible (10 bots are present at a time and they respawn in a random location on kill). The scenario requires a mix of quick reaction time (bots frequently change directions and appear in random locations), tactical thinking (you have to choose which bot to pursue and where to look for them), and fine-motor skills (you have to move your character around using the keyboard and aim accurately using the mouse).

guzey_arena_0 is the test I care the most about. I believe it’s superior to PVT for several reasons:

- I believe it is more externally valid for me. guzey_arena_0 is much more engaging than PVT and mirrors the activities I commonly engage in (writing, coding, playing video games) much better. PVT might be a good model of boring tasks like long-distance driving, but I have few such tasks day-to-day (and don’t drive at all).

- crucially, it requires actual thinking rather than just reaction time, and is thus better suited to assess the impact on complex cognitive abilities

- it tests several cognitive and motor skills at the same time, so a deterioration in any of these three abilities will be picked up by the test

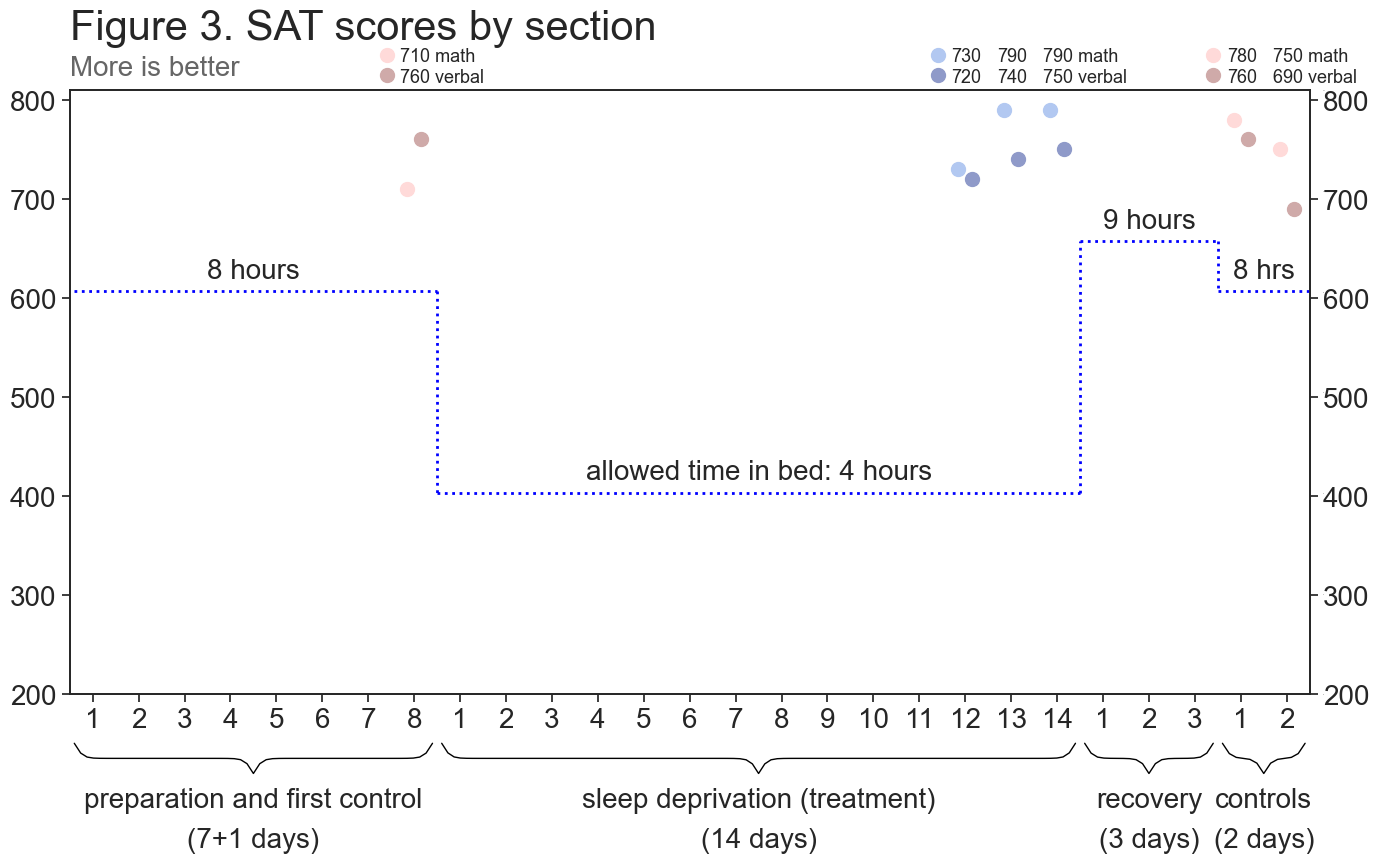

The SAT is a 3-hour composite test consisting of verbal (reading comprehension, grammar, etc.) and math problems. It is the standard way to assess college preparedness in the US and is taken by millions of high school students every year.

Data collection and statistical analysis

I took control measurements 1 day before starting sleep deprivation and on days 4 and 5 after I ended sleep deprivation. I took treatment measurements on days 12, 13, and 14 of sleep deprivation.

Data collection, as well as my strategy for dealing with practice effects, is described in detail in the pre-analysis plan (PAP). All deviations from the PAP are described in the Discussion.

I performed paired t-tests for PVT and for guzey_arena comparing the means of treatment and control conditions at 0.025 significance level (0.05 significance level, Bonferroni-corrected for 2 comparisons) against a two-sided alternative.
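To make the test concrete, here is a sketch of a two-sided paired t-test at the Bonferroni-corrected alpha of 0.025, written with only the Python standard library. It is an illustration, not the script from the GitHub repository: the p-value comes from the identity P(|T| >= |t|) = I_{df/(df+t²)}(df/2, 1/2), with the regularized incomplete beta function evaluated by the continued fraction from Numerical Recipes, and the input numbers are made up. In practice `scipy.stats.ttest_rel` does the same job.

```python
import math
import statistics


def _betacf(a, b, x, max_iter=300, eps=3e-14, tiny=1e-300):
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step of the continued fraction.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd step of the continued fraction.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def _reg_inc_beta(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def two_sided_p(t_stat, df):
    """P(|T| >= |t_stat|) for Student's t distribution with df degrees of freedom."""
    return _reg_inc_beta(df / 2.0, 0.5, df / (df + t_stat * t_stat))


def paired_t_test(control, treatment):
    """Paired t-test on matched observations; returns (t statistic, two-sided p)."""
    if len(control) != len(treatment) or len(control) < 2:
        raise ValueError("need at least two matched pairs")
    diffs = [c - t for c, t in zip(control, treatment)]
    n = len(diffs)
    # Raises ZeroDivisionError if all differences are identical (zero variance).
    t_stat = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t_stat, two_sided_p(t_stat, n - 1)


ALPHA = 0.05 / 2  # 0.05, Bonferroni-corrected for the two primary comparisons

# Hypothetical numbers for illustration only (not data from this experiment).
control = [2, 4, 6, 8]
treatment = [1, 3, 5, 8]
t_stat, p_value = paired_t_test(control, treatment)
print(f"t = {t_stat:.3f}, p = {p_value:.4f}, significant: {p_value < ALPHA}")
```

For reference, `two_sided_p(1.35, 38)` lands close to the 0.18 reported for PVT in the Results.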

In PAP, I wrote:

I will perform two two-tailed t-tests to compare means for both hypotheses at alpha=0.025.

I did not mention that the t-tests would be paired t-tests, because I did not realize that t-tests for independent samples and for paired samples are different.

In PAP, I calculated the required sample sizes for PVT and for guzey_arena_0 to be able to detect effect sizes of 0.8 and 0.5, respectively, at 0.025 significance level and 0.895 power. (0.895 power per test ensures 0.8 joint power, i.e. the probability of correctly rejecting both null hypotheses if they’re both false, assuming the two tests are independent: 0.895² ≈ 0.80.)

The calculated sample size was 39 pairs of observations for PVT and 99 pairs of observations for guzey_arena_0. These are the sample sizes I collected.

However, these sample size calculations were incorrect, because they assumed independent samples. Because the power of a paired t-test is higher than that of an independent-samples t-test, it turned out that the effect sizes I was able to detect with these sample sizes were 0.58 for PVT and 0.35 for guzey_arena_0 (at 0.025 significance level and 0.895 power).

Figure 5. Left: Cohen's d of 0.58. Right: Cohen's d of 0.35. Created with R Psychologist.
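The numbers in the paragraphs above can be checked with a rough calculation. The sketch below, using only Python's `statistics.NormalDist`, computes the joint power (0.895² ≈ 0.80, which assumes the two tests are independent) and the smallest standardized paired effect size d_z detectable with 39 and 99 pairs. It uses the normal approximation with Guenther's small-sample correction rather than the exact noncentral t distribution, so its output (about 0.58 and 0.36) can differ from an exact calculation in the second decimal.

```python
import math
from statistics import NormalDist


def z_quantile(p):
    """Standard normal quantile function."""
    return NormalDist().inv_cdf(p)


ALPHA = 0.05 / 2        # Bonferroni-corrected, two-sided
PER_TEST_POWER = 0.895

# Joint power, assuming the two tests are independent.
joint_power = PER_TEST_POWER ** 2


def detectable_dz(n_pairs, alpha=ALPHA, power=PER_TEST_POWER):
    """Approximate smallest Cohen's d_z a two-sided paired t-test can detect.

    Solves n = (z_{1-alpha/2} + z_power)^2 / d^2 + z_{1-alpha/2}^2 / 2
    (normal approximation plus Guenther's correction) for d.
    """
    z_a = z_quantile(1 - alpha / 2)
    z_b = z_quantile(power)
    return math.sqrt((z_a + z_b) ** 2 / (n_pairs - z_a ** 2 / 2))


for label, n in [("PVT", 39), ("guzey_arena_0", 99)]:
    print(f"{label}: {n} pairs -> d_z of about {detectable_dz(n):.2f}")
print(f"joint power: {joint_power:.3f}")
```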

I am not performing any statistics on the SAT data, given the extremely small number of observations.

I feel slightly uneasy correcting for only 2 comparisons while still discussing the SAT results, even though I’m not performing any statistics on them.

Results

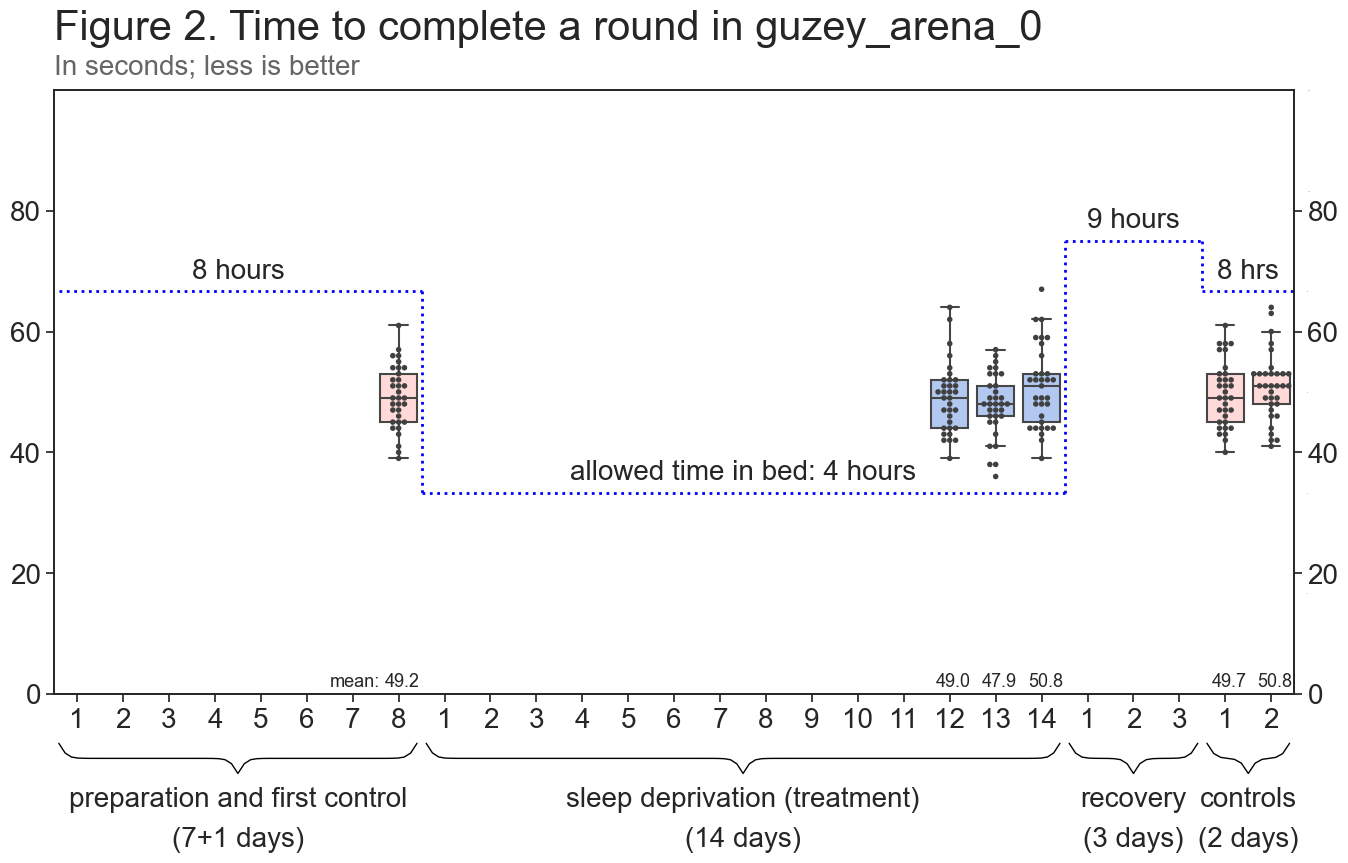

PVT

Control mean: 1.34; treatment mean: 1.12 (less is better).

Control median: 1.0; treatment median: 1.0 (less is better).

Control standard error: 0.12; treatment standard error: 0.11.

p-value: 0.18; t-value: 1.35. Not significant.

Summary: There was no statistically significant difference in my PVT performance between control and treatment conditions.

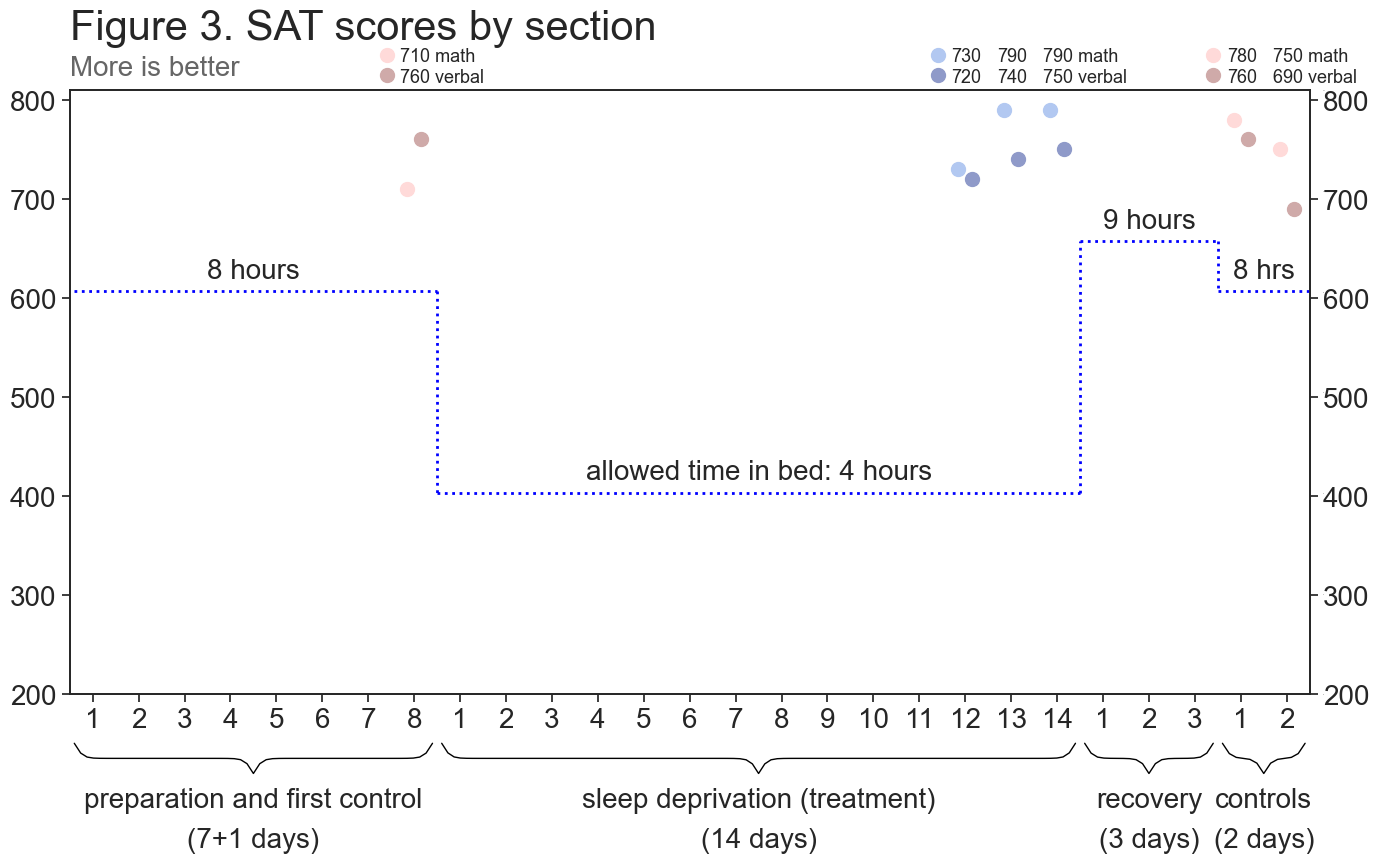

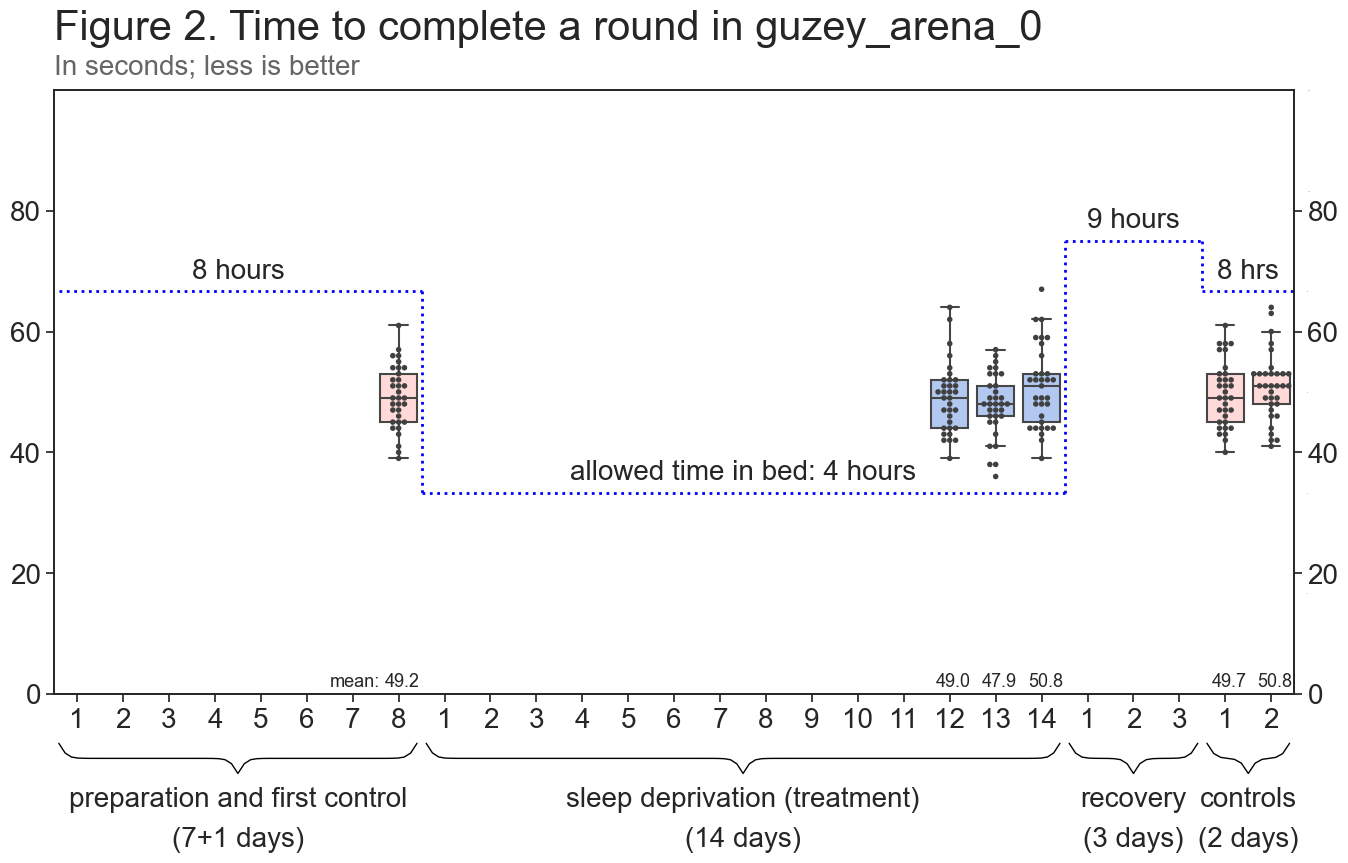

guzey_arena_0

Control mean: 49.91; treatment mean: 49.24 (less is better).

Control median: 50.0; treatment median: 49.0 (less is better).

Control standard error: 0.54; treatment standard error: 0.6.

p-value: 0.382; t-value: 0.879. Not significant.

Summary: There was no statistically significant difference in my guzey_arena_0 performance between control and treatment conditions.

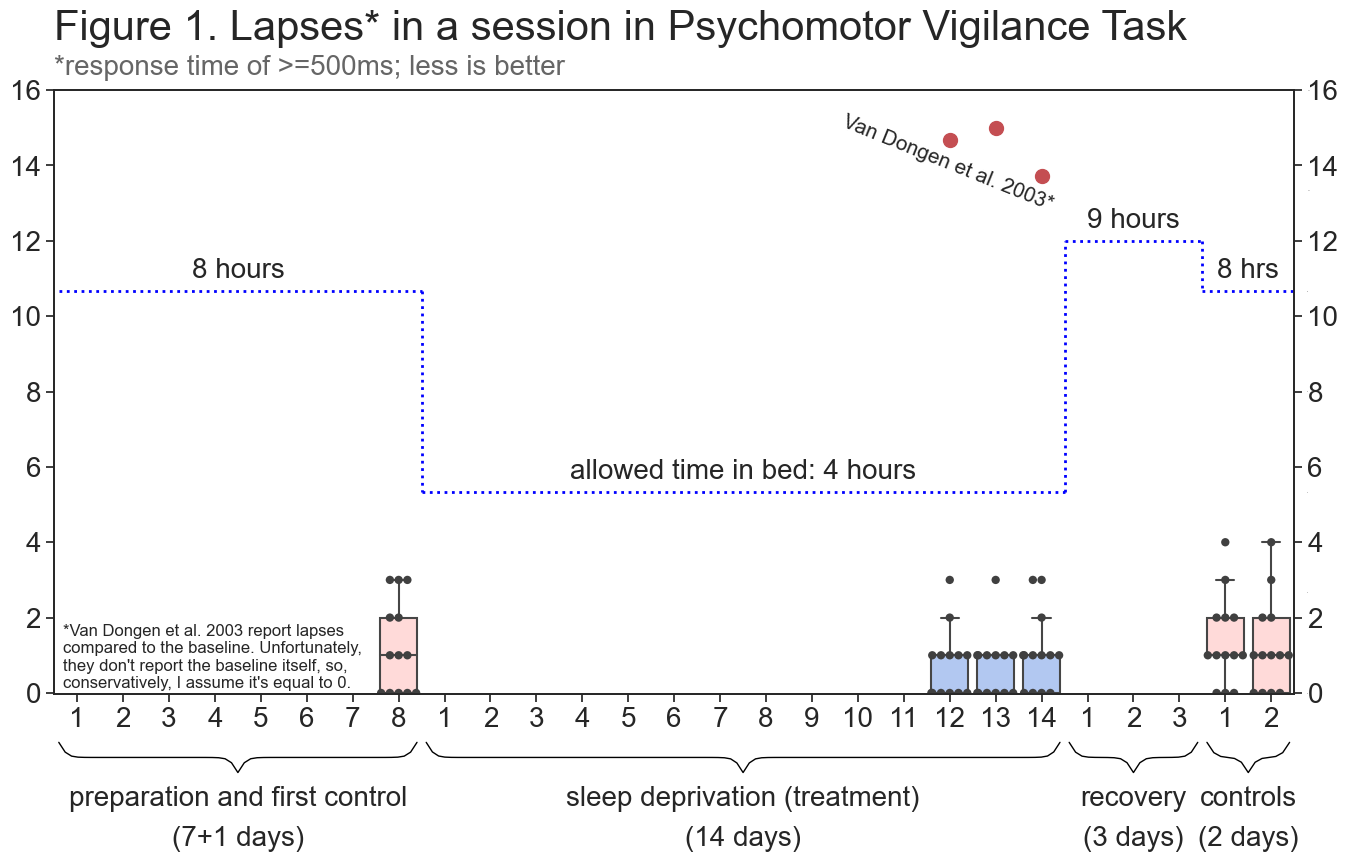

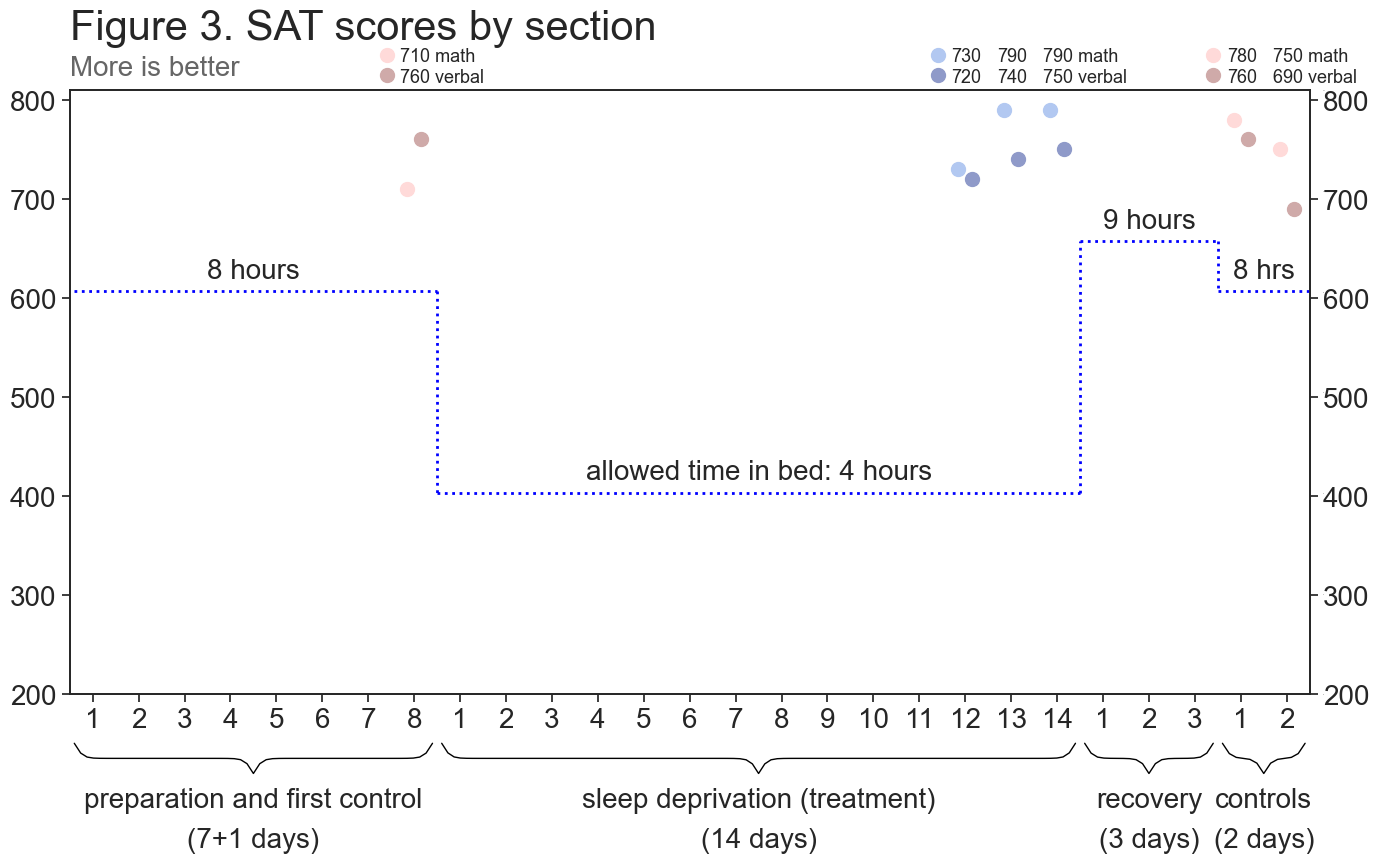

SAT

Control scores (math,verbal): (710,760), (780,760), (750,690); treatment scores (math,verbal): (730,720), (790,740), (790,750) (more is better).

Discussion

In PAP, I wrote:

My key hypothesis is that the number of lapses on PVT increases because it’s a boring as hell task. It’s 10 minutes just staring into the computer waiting for the red dot to appear periodically. So I think that sleep deprived are just falling asleep during the task but are otherwise functioning normally and their cognitive function is not compromised. Therefore, I expect that my PVT performance will deteriorate but Aimgod performance will not, since this is an interesting task, even though it primarily relies on attention for performance.

PVT

My first hypothesis was not confirmed. There was no statistically significant difference in my PVT performance between control and treatment conditions. I thought that during the test I would start falling asleep and slip into microsleeps, but it seems that video games, which I played whenever I was feeling too sleepy, stimulated me to the point that even a boring 10-minute test was not enough to induce microsleeps.

I believe that the quality of my PVT data is not very high. First, while I planned to take PVT at 7:40, 13:00, 13:20, 13:40, 16:00, 16:20, 16:40, 19:00, 19:20, 19:40, 22:00, 22:20, and 22:40, sometimes I forgot about it and sometimes I was busy at the designated time. As a result, even though I took the vast majority of tests within 5-10 minutes of the designated time (largest delay: 22 minutes), I did not take PVT exactly on time. It could be argued that I strategically delayed my PVT sessions in order to take the test when I started feeling more alert. Further, I was extremely bored in the last 2 days of taking control measurements and sometimes got distracted and lost in thought, which resulted in sessions with 3 and 4 lapses (as can be seen in Figure 1). I do not believe that these lapses reflect a lack of alertness. Finally, I had to change the timing of the last 3 tests on the last treatment day from 22:00, 22:20, 22:40 to 21:00, 21:20, 21:40 because I was going to bed at 22:00 and did not account for this when writing the protocol (all such changes can be found here).

To conclude, I believe that PVT data strongly suggests that I was able to control my level of sleepiness for the duration of the experiment but I don’t think much else can be concluded from it. This interpretation seems to me to be robust, but I welcome any further criticisms that might challenge it.

Why such a big difference compared to Van Dongen et al. 2003?

I’m not sure. I don’t think that I have any mutations that make me need less sleep, given that I normally sleep 7-8 hours. I believe that the most plausible explanation is that my cognitive performance simply was not affected that much by chronic sleep loss.

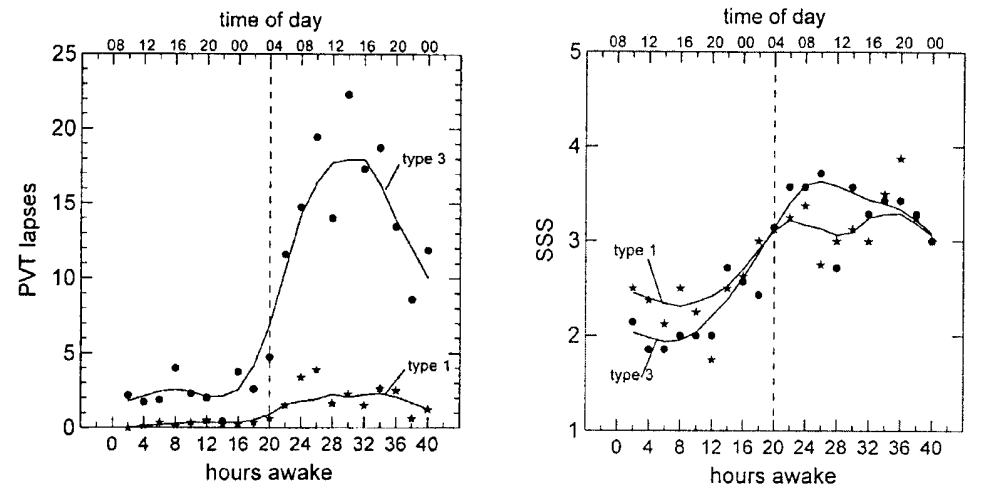

It seems that there’s very high variation in how people respond to sleep deprivation. For example, Van Dongen et al. 2004 (Van Dongen H, Maislin G, Dinges DF. Dealing with inter-individual differences in the temporal dynamics of fatigue and performance: importance and techniques. Aviation, Space, and Environmental Medicine. 2004 Mar 1;75(3):A147-54), a different paper by the same first author, find that:

As measured by performance lapses on a psychomotor vigilance task (13), some subjects were found to have much greater impairment from sleep loss than others (Fig. 1, left-hand panel). Thus, some subjects seemed to be relatively vulnerable to performance impairment due to sleep loss, while others seemed to be relatively resilient. Interestingly, vulnerable and resilient individuals did not differ significantly in the amount of sleep they felt they needed or routinely obtained, as assured by surveys and daily diaries. This suggests that inter-individual differences in vulnerability to sleep loss are not just determined by differences in sleep need (42).

Figure 6. Neurobehavioral performance lapses on a psychomotor vigilance task (PVT; left-hand panel) and subjective sleepiness score on the Stanford Sleepiness Scale (SSS; right-hand panel) during 40 h of total sleep deprivation in a laboratory environment (11). Stars show PVT performance lapses and SSS sleepiness scores for the eight subjects most resilient to sleep loss (type 1); dots show the data for the 7 subjects most vulnerable to sleep loss (type 3). Despite the considerable difference in psychomotor performance impairment, there was no statistically significant difference in the profile of sleepiness scores between these two groups. Reprinted from Van Dongen et al. 2004.

guzey_arena_0

My second hypothesis was confirmed. There was no statistically significant difference in my guzey_arena_0 performance between control and treatment conditions.

I believe that the quality of my guzey_arena_0 data is high. I took tests in the same environment and was virtually always fully focused on them. As with PVT, I performed the tests only approximately at the planned time (almost always within 5-10 minutes of the scheduled time). Also as with PVT, I had to change the time at which I took some of the tests because I forgot to account for going to bed earlier in the last 6 days of the experiment (all such changes can be found here). During one of the treatment sessions I accidentally took 12 samples. I discovered that I had miscounted the number of samples in that session when I was extracting the data from the video, and I did not include sample 12 in the data, since the paired t-test I use relies on the data being matched and my plan was to collect precisely 11 samples in each session. Even when that sample is included, the control mean remains non-significantly higher than the treatment mean.

To conclude, I believe that the guzey_arena_0 data strongly suggests that there was no major cognitive deterioration in the treatment condition. I believe that it provides moderate evidence that there was no moderate cognitive deterioration in the treatment condition. I believe that it is weakly suggestive evidence that there was no cognitive deterioration at all in the treatment condition.

SAT

In contrast to guzey_arena_0, the SAT has an absolute ceiling (800 on each section), which I know I’m able to approach (at least on the math section). This creates an implicit control condition and makes the SAT immune to me consciously or unconsciously suppressing my control performance. This means that hitting a score in the vicinity of 800 on the math section and somewhere between 700 and 750 on the verbal section is pretty good evidence of a lack of major cognitive deterioration.

I ended up getting (730,720), (790,740), and (790,750) on (math,verbal) sections of SAT on days 12, 13, and 14 of treatment and getting (710,760), (780,760), and (750,690) on control days. The first low control score on the math section is a result of lack of practice and me running out of time after getting stuck on a question. The last low control score on the verbal section is a result of me becoming very bored with taking the SAT 5 days in a row, getting lost in thought for 10 minutes, and having to rush.

To conclude, I believe that the SAT data strongly suggests that there was no major or moderate deterioration in many aspects of my cognition, given that the test includes challenging (to me) reading comprehension questions and requires quick mathematical thinking. I believe that it provides barely any evidence regarding minor cognitive deterioration, given that I usually end up having free time at the end of every section, which I use to double-check my responses, and minor cognitive deterioration could result in me getting roughly the same score but with less time left.

Subjective data

I was fully alert (very roughly) 85% of the time I was awake, moderately sleepy 10% of the time I was awake, and was outright falling asleep 5% of the time. Subjectively, I felt that I gained somewhere between 2 and 3 hours of productive time every day.

Further, it appears that my sleep debt was not increasing over time. My impression is that during acute sleep deprivation, once one has gone 70+ hours without sleep, most stimulation ceases to work, meaning that video games are no longer able to bring one back to full alertness. In contrast, I found that I was able to go from “falling asleep” to “fully alert” at all times by playing video games for 15-20 minutes. I ended up playing video games for approximately 30-90 minutes a day.

Finally, even though I felt no cognitive deterioration and no accumulating sleep debt, maintaining this sleep schedule was incredibly hard. I had to resist regular urges to sleep, and on several occasions when I did not want to play video games I came very close to failing the experiment: at one point I fell asleep in my chair and was woken a few minutes later by my wife. Occasionally, when I tried to see if I could regain full alertness without video games, I would fall into a series of microsleeps lasting several seconds each. I don’t think I would have been able to maintain this schedule for 14 days had I not committed so hard to it by spending so much time designing it and by publicly announcing the experiment.

A call with a friend on day 10 of treatment

In the evening of day 10 of treatment I had a call with a friend whom I talk to regularly. We spent an hour discussing strengths and weaknesses of several academic papers. For the majority of the conversation I was functioning at the peak of my ability, having to constantly engage with complex arguments and to do a lot of thinking on the fly, which taxed my long-term memory, short-term memory, logical thinking, and speaking ability. After the call I asked that friend whether he had noticed that I’d been sleeping for 4 hours a night for 10 days. He wrote:

lol

Did not notice

A conversation with a roommate on day 2 of recovery

My roommate, who knew about my experiment, asked me how long I would continue sleeping 4 hours a night after I had already had 2 nights of recovery sleep, suggesting that he did not notice any significant changes in my state after I stopped being sleep deprived. I usually talk to him for 30-60 minutes a day.

Limitations

Lack of blinding

I like being awake and I do not like sleeping. Thus, I was motivated to find no effect of sleep deprivation on cognition. This might have resulted in me trying less hard in the control condition than in the treatment condition. Unfortunately, I do not know how to blind myself to the amount of sleep I get. I discuss the lack of blinding in the context of this experiment in the SAT subsection of the Discussion.

Inability to detect subtle changes in cognitive ability

I care about even the smallest changes in my cognitive ability due to sleep deprivation. For example, if the effect size is “only” 0.1, I still care about it. However, to detect such an effect in this experiment (at 0.025 significance level and 0.895 power), I would have had to spend more than 40 hours collecting 1224 pairs of observations for guzey_arena_0.

The situation would not look much better if I ditched PVT and only performed guzey_arena_0. In this case, I would need to collect 787 pairs of observations to detect effect-size of 0.1 at 0.05 alpha and 0.8 power.

Figure 7. Cohen's d of 0.1. Created with R Psychologist.
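The same kind of approximation reproduces the sample sizes quoted above. This is a sketch assuming a two-sided paired t-test with Cohen's d_z = 0.1, using the normal approximation plus Guenther's correction (the helper `pairs_needed` is hypothetical, not from the repository); it lands within a pair or two of the 1224 and 787 pairs mentioned in the text.

```python
import math
from statistics import NormalDist


def pairs_needed(d, alpha, power):
    """Approximate pairs for a two-sided paired t-test to detect Cohen's d_z = d.

    Normal approximation plus Guenther's small-sample correction; an exact
    noncentral-t calculation can differ by a pair or so.
    """
    z_a = NormalDist().inv_cdf(1 - alpha / 2)
    z_b = NormalDist().inv_cdf(power)
    return math.ceil((z_a + z_b) ** 2 / d ** 2 + z_a ** 2 / 2)


# Design of this experiment: alpha = 0.025 (two tests), 0.895 power per test.
print(pairs_needed(0.1, alpha=0.025, power=0.895))
# guzey_arena_0 alone: alpha = 0.05, 0.8 power.
print(pairs_needed(0.1, alpha=0.05, power=0.8))
```

Because the required number of pairs scales with 1/d², shrinking the target effect from 0.35 to 0.1 multiplies the work by roughly (0.35/0.1)² ≈ 12.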

Health

Although I did not experience any physical deterioration during the experiment, I did not track my health very rigorously.

It is possible that sleeping 4 hours a night is unhealthy for the body or the mind in the long term, but that the damage accumulates too slowly to be noticed over a 14-day experiment.

Notes

- I spent approximately 35 hours collecting the data for the experiment. ~13 hours for PVT, ~3 hours for guzey_arena_0, and ~19 hours for the SAT

- I spent >20 hours creating the plots.

- when creating graphs, I had to enter dummy data points into all datasets in order to fill the x axis. Without realizing it, I had also entered dummy data for days on which I had real data, so the dummy points mixed with the real ones. I only noticed this when I started creating the SAT graph: there the dummy data fell within the bounds of the y axis, and I realized that it was messing up my boxplots. I had not noticed this when analyzing the PVT and guzey_arena_0 data, so every day on which I took measurements contained one hidden out-of-bounds point that slightly moved all boxplots upwards and sometimes changed medians, quartiles, etc. Had I not decided to (1) collect SAT data and (2) build graphs for SAT using the same pipeline I used for PVT and guzey_arena_0, I would not have noticed this, and my graphs would have been slightly off.
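The effect of the dummy-point bug is easy to reproduce in miniature. The sketch below uses made-up completion times and a hypothetical placeholder value of 1000; it shows that a single off-screen point shifts the median even though it never appears on the plot, and one possible fix (tagging placeholder rows and filtering them out before computing any statistic). It is not the author's plotting pipeline.

```python
import statistics

# Hypothetical completion times (seconds) for one measurement day; not real data.
real_times = [48, 50, 51, 49, 47, 52]

# A placeholder far outside the plotted y range, added only so that the day
# shows up on the x axis. If it ends up in the same series as the real points,
# it is invisible on the plot but still enters every summary statistic.
PLACEHOLDER = 1000
contaminated = real_times + [PLACEHOLDER]

print(statistics.median(real_times))    # 49.5
print(statistics.median(contaminated))  # 50

# One way to avoid this: tag each row and drop placeholders before summarizing.
rows = [(value, False) for value in real_times] + [(PLACEHOLDER, True)]
clean = [value for value, is_placeholder in rows if not is_placeholder]
print(statistics.median(clean))         # 49.5
```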

Acknowledgements

I would like to thank Gleb Posobin, Drake Thomas, and Nabeel Qureshi for reading drafts of this study. I would like to especially thank Anastasia Kuptsova (for waking me up when I fell asleep during the day) and Misha Yagudin (for improving the plots immeasurably and for many other helpful suggestions).

I would like to thank Tyler Cowen’s Emergent Ventures, Kyle Schiller and Adam Canady for the financial support of my writing and research.

I would like to thank Millisecond Software for providing me with a discount for Inquisit 6 Lab, which I used to perform PVT.

Citation

In academic work, please cite this study as:

Guzey, Alexey. The Effects on Cognition of Sleeping 4 Hours per Night for 12-14 Days: a Pre-Registered Self-Experiment. Guzey.com. 2020. Available from https://guzey.com/science/sleep/14-day-sleep-deprivation-self-experiment/.

Or download a BibTeX file here.

FAQ

Q:

In your November 2019 tweet announcing your Why We Sleep post you wrote:

For the last two months I’ve been sleeping for 4 hours a night

Why was this not mentioned in the post? It seems that it would provide important context for the experiment, given that your body might have developed some sort of adaptation to sleep deprivation.

A:

This tweet was a joke. I never slept 4 hours a night for more than a couple of days prior to the experiment. I experimented with sleep in 2019-2020 but it was not rigorous and I was never able to sleep less than 6-7 hours a day consistently. As mentioned above, when I don’t restrict sleep, I sleep 7-8 hours a night.

Future experiments

- in future long-term sleep deprivation experiments, I will measure the amount of time I play video games every day to fend off sleepiness. Then, by subtracting sleep and video-game time from 24 hours, I will have a good estimate of the amount of time I was fully functional during the day

- I’m also going to take way fewer (if any) PVTs and will replace the SAT with some other task; both of these take too much time

- ideas for sleep experiments

  - how does the time I stop eating influence how much I sleep?

    - stop eating at 9pm vs 5pm randomly for, say, 60 days; go to bed at 11pm and wake up naturally.
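The eating-time idea needs a randomized schedule. One hedged way to generate it, assuming a balanced design (exactly 30 days at each cutoff, order shuffled) rather than an independent coin flip each day, is sketched below; the 17:00 and 21:00 cutoffs correspond to the 5pm and 9pm options above, and the function name and seed are hypothetical.

```python
import random
from collections import Counter


def eating_cutoff_schedule(n_days=60, cutoffs=("17:00", "21:00"), seed=None):
    """Balanced randomization: each cutoff appears equally often, in random order."""
    if n_days % len(cutoffs):
        raise ValueError("n_days must divide evenly among the cutoffs")
    schedule = list(cutoffs) * (n_days // len(cutoffs))
    random.Random(seed).shuffle(schedule)
    return schedule


schedule = eating_cutoff_schedule(seed=2020)
print(Counter(schedule))  # 30 days of each cutoff
print(schedule[:7])       # assignments for the first week
```

Balancing guarantees equal numbers of days per condition; a daily coin flip would also be valid randomization but could leave the two conditions unequal over 60 days.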

Pre-Analysis plan of the current experiment

Also available (with the ability to view all changes since first publication) on GitHub.

This is the protocol for my sleep experiment that I will start on 2020-04-03. I will sleep 4 hours per night for 2 weeks and evaluate the effects of doing so on my cognition using psychomotor vigilance task (essentially, a reaction test), SAT (a 3 hour test that involves reading and math), and Aimgod custom scenario I called guzey_arena_0 (video) (this is a first-person shooter trainer game that allows the creation of custom scenarios. The scenario I created requires constant attention, eye-hand coordination, tactical thinking).

Methods

(inspired by Van Dongen et al 2003 https://academic.oup.com/sleep/article/26/2/117/2709164)

I’m going to remain inside throughout the experiment and my behavior will be monitored by my wife between 07:30-23:30 every day and will not be monitored between 23:30-03:30. I will check in on a Google Sheet every 15 minutes during the unmonitored period to confirm that I’m not sleeping. I will work, play video games, watch movies, browse the internet, read, walk around the apartment, but will not engage in any vigorous activities. I will avoid direct sunlight and will turn off all lights during sleep times. I did not use any caffeine, alcohol, tobacco, and/or medications in the 2 weeks before the experiment. I do not have any medical, psychiatric or sleep-related disorders, aside from occasionally experiencing stress-related strain in my chest, and I will write down any unusual symptoms I experience during the experiment. I worked neither regular night nor rotating shift work within the past 2 years. I have not travelled across time zones in the 3 months before the experiment.
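The 15-minute check-in rule can be verified mechanically after each night. This is a sketch assuming the check-ins cover the unmonitored 23:30-03:30 window and that the Google Sheet is exported as a list of timestamps (the export step, the function name `find_gaps`, and the example night are all hypothetical); it reports every stretch longer than 15 minutes without a check-in.

```python
from datetime import datetime, timedelta

MAX_GAP = timedelta(minutes=15)


def find_gaps(checkins, window_start, window_end, max_gap=MAX_GAP):
    """Return (start, end) pairs in the window with no check-in for longer than max_gap.

    The window boundaries act as anchors, so a late first check-in or an
    early last one is reported too.
    """
    inside = sorted(t for t in checkins if window_start <= t <= window_end)
    points = [window_start, *inside, window_end]
    return [(a, b) for a, b in zip(points, points[1:]) if b - a > max_gap]


# Hypothetical night: check-ins every 15 minutes from 23:30 to 03:30, one missed.
start = datetime(2020, 4, 5, 23, 30)
end = datetime(2020, 4, 6, 3, 30)
checkins = [start + timedelta(minutes=15 * i) for i in range(17)]
del checkins[5]  # the 00:45 check-in is missing
print(find_gaps(checkins, start, end))  # one gap: 00:30 to 01:00
```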

Structure of the experiment

Days -7 to -1 (2020-03-27 to 2020-04-02): adaptation.

I will give myself a sleep opportunity of 8 hours (23:30-07:30) for 7 days prior to the experiment to make sure I don’t have any sleep debt carried over. My average sleep duration in these days (2020-03-27 to 2020-04-02) was INPUT with the standard deviation of INPUT.

Day -1 (2020-04-02): practice

I will give myself a sleep opportunity of 8 hours (23:30-07:30). I will practice the tasks I’m going to use in order to mitigate the most extreme practice effects during the experiment. I will perform all tasks as follows:

- PVT at 07:30, 13:30, and 19:30.

- SAT at 07:40

- Aimgod guzey_arena_0 at 12:30-12:50, 15:30-15:50, 18:30-18:50 and 21:30-21:50 (I also played about 2 hours of guzey_arena_0 on 2020-03-31 and 2020-04-01 while designing the experiment)

Days 1 (2020-04-03), 19 (2020-04-21), 20 (2020-04-22): control.

I will give myself a sleep opportunity of 8 hours (23:30-07:30). I will perform all tasks on these days as follows:

- PVT at 7:40, 13:00, 13:20, 13:40, 16:00, 16:20, 16:40, 19:00, 19:20, 19:40, 22:00, 22:20, 22:40

- SAT at 07:50

- Aimgod 11 sessions each at 12:00, 18:00, and 23:00

Days 2 to 12 (2020-04-04 to 2020-04-14): sleep deprivation

I will give myself a sleep opportunity of 4 hours (03:30-07:30). I will not test myself on days 2 to 12.

Days 13-15 (2020-04-15 to 2020-04-17): sleep deprivation, testing

I will perform all tasks on days 13, 14, 15 as follows:

- PVT at 7:40, 13:00, 13:20, 13:40, 16:00, 16:20, 16:40, 19:00, 19:20, 19:40, 22:00, 22:20, 22:40 (on day 15 I’m going to bed at 22:30, so the last PVT tests will be at 21:00, 21:20, 21:40 instead of 22:00, 22:20, 22:40)

- SAT at 07:50

- Aimgod 11 sessions each at 12:00, 18:00, and 00:00 (on day 15 I’m going to bed at 22:30, so the last Aimgod test will be at 22:00)

Days 16-18 (2020-04-18 to 2020-04-20): recovery

I will give myself a sleep opportunity of 9 hours (22:30-07:30). I will not test myself on days 16-18.

Rationale behind sample sizes and data analysis

I will test two statistical hypotheses in the study:

- number of lapses on PVT (defined as response time >500ms) is different between control and experimental conditions

- time to finish the scenario (i.e. time to kill 20 bots) in guzey_arena_0 is different between control and experimental conditions

Effect sizes of sleep deprivation on the number of PVT lapses have previously been reported to be huge. In particular, Basner and Dinges 2011 report:

Effect sizes were high for both TSD (1.59–1.94) and PSD (0.88–1.21) for PVT metrics related to lapses and to measures of psychomotor speed

with PSD meaning that participants were allowed to sleep for 4 hours a night for 5 days. Studies show that the effect increases monotonically with an increase in the length of sleep deprivation (e.g. Van Dongen et al 2003), so we expect that the effect of sleeping for 4 hours a night for 2 weeks will be larger than this.

To be on the safe side, I’m going to collect enough PVT samples (n=39 control; n=39 experimental) to detect an effect size of 0.8 with 97.5% confidence level and 89.5% power.

I will collect enough Aimgod samples (n=99 control; n=99 experimental) to detect an effect size of 0.5 (medium-size effect) with 97.5% confidence level and 89.5% power.

Note that 97.5% confidence level is the standard 95% confidence level Bonferroni-corrected for 2 comparisons, and that 89.5% power ensures that we achieve 80% joint power (probability to correctly reject both null hypotheses if they’re both false).

I expect that practice effects will accumulate monotonically with repeated performance of the SAT and Aimgod, therefore I’m trying to equalize the average practice effects between control and experimental conditions (control: day 1 (low practice), 19 (medium practice), 20 (high practice); experimental: day 13 (medium practice), day 14 (medium practice), day 15 (medium practice)).

PVT is resistant to practice effects.

Statistical tests

I will perform two two-tailed t-tests to compare means for both hypotheses at alpha=0.025.

I’m taking the SAT as a curiosity, will collect very few samples and will not perform any statistical analysis on them.

Pre-registering my hypotheses

As Krause et al 2017 write in their review for Nature Reviews Neuroscience:

One cognitive ability that is especially susceptible to sleep loss is attention, which serves ongoing goal-directed behaviour [4]. Performance on attentional tasks deteriorates in a dose-dependent manner with the amount of accrued time awake, owing to increasing sleep pressure [5,6,7]. The prototypic impairments on such tasks are known as ‘lapses’ or ‘microsleeps’, which involve response failures that reflect errors of omission [4,5,6]. More specifically, attentional maintenance becomes highly variable and erratic (with attention being sustained, lost, re-established, then lost again), resulting in unstable task performance [4].

My key hypothesis is that the number of lapses on PVT increases because it’s a boring as hell task. It’s 10 minutes just staring into the computer waiting for the red dot to appear periodically. So I think that sleep deprived are just falling asleep during the task but are otherwise functioning normally and their cognitive function is not compromised. Therefore, I expect that my PVT performance will deteriorate but Aimgod performance will not, since this is an interesting task, even though it primarily relies on attention for performance.

I will record the data and will not look at it until after the data collection is over to try to minimize the effect of my expectations on my performance. I will partially be able to see my performance level at Aimgod while performing it but will try not to do it…

FAQ

Why are you not testing memory, executive function, mental rotation, creative reasoning…?

The more hypotheses I have, the more samples I need to collect for each hypothesis in order to maintain the same false positive probability (https://en.wikipedia.org/wiki/Multiple_comparisons_problem). This is an n=1 study, I’m barely collecting enough samples to measure medium-to-large effects, and I will spend 10 hours performing PVT. I’m not in a position to test many hypotheses at once.
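To illustrate the point about multiple comparisons, the sketch below Bonferroni-splits a family-wise alpha of 0.05 across k hypotheses and computes the approximate number of pairs each hypothesis then needs. The assumptions are illustrative, not the plan's: a paired design, a medium effect (d_z = 0.5), 80% power per test, and the normal approximation with Guenther's correction; `pairs_per_hypothesis` is a hypothetical helper.

```python
import math
from statistics import NormalDist


def pairs_per_hypothesis(k, d=0.5, family_alpha=0.05, power=0.8):
    """Approximate pairs per hypothesis when family_alpha is Bonferroni-split across k tests."""
    alpha = family_alpha / k
    z_a = NormalDist().inv_cdf(1 - alpha / 2)
    z_b = NormalDist().inv_cdf(power)
    return math.ceil((z_a + z_b) ** 2 / d ** 2 + z_a ** 2 / 2)


for k in (1, 2, 4, 8):
    n = pairs_per_hypothesis(k)
    print(f"{k} hypotheses: ~{n} pairs each, ~{k * n} pairs in total")
```

Both the per-hypothesis sample size and, even more so, the total grow with every added hypothesis, which is the trade-off described above.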